NVIDIA Unleashes The First Jetson AGX Orin Module


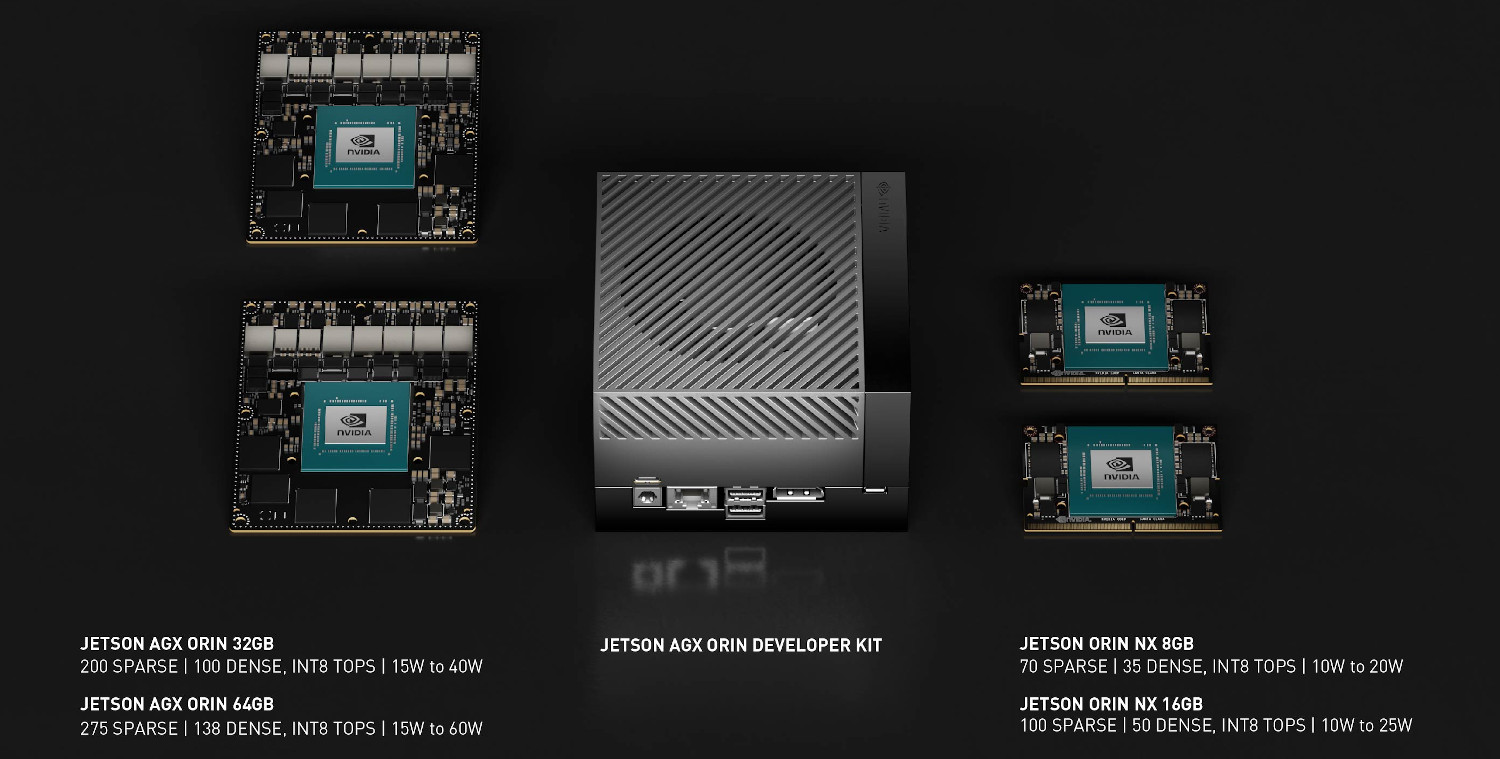

Back in March, NVIDIA introduced Jetson Orin, the next generation of their ARM single-board computers intended for edge computing applications. The new platform promised to deliver “server-class AI performance” on a board small enough to install in a robot or IoT device, with even the lowest tier of Orin modules offering roughly double the performance of the previous Jetson Xavier modules. Unfortunately, there was a bit of a catch: at the time, Orin was only available in development kit form.

But today, NVIDIA has announced the immediate availability of the Jetson AGX Orin 32GB production module for $999 USD. This is essentially the mid-range offering of the Orin line, which makes releasing it first a logical enough choice. Users who need the top-end performance of the 64GB variant will have to wait until November, but there’s still no hard release date for the smaller NX Orin SO-DIMM modules.

That’s a bit of a letdown for folks like us, since the two SO-DIMM modules are probably the most appealing for hackers and makers. At $399 and $599, their pricing makes them far more palatable for the individual experimenter, while their smaller size and more familiar interface should make them easier to integrate into DIY builds. While the Jetson Nano is still an unbeatable bargain for those looking to dip their toes into the CUDA waters, we could definitely see folks investing in the far more powerful NX Orin boards for more complex projects.

While the AGX Orin modules might be a bit steep for the average tinkerer, their availability is still something to be excited about. Thanks to the common JetPack SDK framework shared by the Jetson family of boards, applications developed for these higher-end modules will largely remain compatible across the whole product line. Sure, the cheaper and older Jetson boards will run them slower, but as far as machine learning and AI applications go, they’ll still run circles around something like the Raspberry Pi.

