Deep Learning’s Little-Known Debt to The Innovator’s Dilemma


In 1997, Harvard Business School professor Clayton Christensen created a sensation among venture capitalists and entrepreneurs with his book The Innovator’s Dilemma. The lesson that most people remember from it is that a well-run business simply can’t afford to switch to a new approach (one that ultimately will replace its current business model) until it is too late.

One of the most famous examples of this conundrum involved photography. The large, very profitable companies that made film for cameras knew in the mid-1990s that digital photography would be the future, but there was never really a good time for them to make the switch. At almost any point they would have lost money. So what happened, of course, was that they were displaced by new companies making digital cameras. (Yes, Fujifilm did survive, but the transition was not pretty, and it involved an improbable series of events, machinations, and radical changes.)

A second lesson from Christensen’s book is less well remembered but is an integral part of the story. The new companies springing up might get by for years with a disastrously less capable technology. Some of them, nevertheless, survive by finding a new niche they can fill that the incumbents cannot. That is where they quietly grow their capabilities.

For example, the early digital cameras had much lower resolution than film cameras, but they were also much smaller. I used to carry one on my key chain in my pocket and take photos of the participants in every meeting I had. The resolution was far too low to record stunning vacation vistas, but it was good enough to augment my poor memory for faces.

This lesson also applies to research. A great example of an underperforming new approach was the second wave of neural networks during the 1980s and 1990s, which would eventually revolutionize artificial intelligence starting around 2010.

Neural networks of various sorts had been studied as mechanisms for machine learning since the early 1950s, but they weren’t very good at learning interesting things.

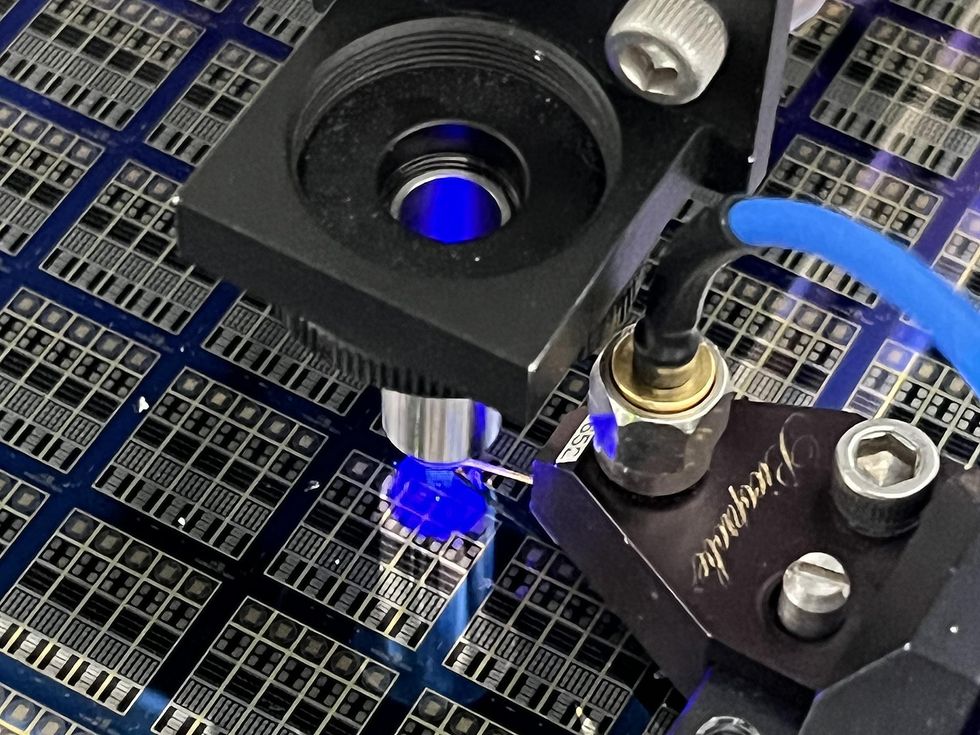

In 1979, Kunihiko Fukushima first published his research on something he called shift-invariant neural networks, which enabled his self-organizing networks to learn to classify handwritten digits wherever they were in an image. Then, in the 1980s, a technique called backpropagation was rediscovered; it allowed for a form of supervised learning in which the network was told what the right answer should be. In 1989, Yann LeCun combined backpropagation with Fukushima’s ideas into something that has come to be known as convolutional neural networks (CNNs). LeCun, too, concentrated on images of handwritten digits.


Over the next 10 years, the U.S. National Institute of Standards and Technology (NIST) came up with a database, which was modified by LeCun, consisting of 60,000 training digits and 10,000 test digits. This standard test database, called MNIST, allowed researchers to precisely measure and compare the effectiveness of different improvements to CNNs. There was a lot of progress, but CNNs were no match for the entrenched AI methods in computer vision when applied to arbitrary images generated by early self-driving cars or industrial robots.

But during the 2000s, more and more learning techniques and algorithmic improvements were added to CNNs, leading to what is now known as deep learning. In 2012, suddenly, and seemingly out of nowhere, deep learning outperformed the standard computer vision algorithms on a set of test images of objects known as ImageNet. The poor cousin of computer vision triumphed, and it completely changed the field of AI.

A small number of people had labored for decades and surprised everyone. Congratulations to all of them, both well known and not so well known.

But beware. The message of Christensen’s book is that such disruptions never stop. Those standing tall today will be surprised by new approaches that they have not yet begun to consider. There are small groups of renegades trying all sorts of new things, and some of them, too, are willing to labor quietly and against all odds for decades. One of those groups will someday surprise us all.

I love this aspect of technological and scientific disruption. It is what makes us humans great. And dangerous.

This article appears in the July 2022 print issue as “The Other Side of The Innovator’s Dilemma.”
